©2021 Reporters Post24. All Rights Reserved.

Google PageSpeed Insights is a tool you can use to measure the perceived latency of your website. Getting a good score here is vital because Google has announced that it will use these scores as an input into its search ranking algorithm.

We set out to see what it would take to score 100 on PageSpeed Insights on mobile. When we embarked on this effort, we already scored 100 on desktop, but modern commerce is mobile commerce, and there we only scored in the mid-60s. In this blog post, we share ways to get your site scoring 100 on mobile as well. Many companies claim 100 on desktop, but 100 on mobile is a bit of a unicorn. So let’s dive in.

Builder.io is a standard Next.js site. Because the site itself is running on the Builder content platform, the content already adheres to all of the best practices for image sizes, preloading, etc. Yet, it still only scored in the 60s. Why?

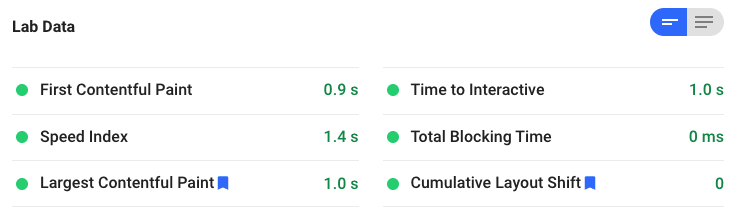

It helps to look at the breakdown of metrics that make up the score.

The problem can be broken down into two parts:

- TBT/TTI: The JavaScript is causing too much blocking time on the page.

- FCP/LCP: The page has too much content to render for mobile browsers.

So we should aim to:

- Decrease the amount of JavaScript.

- Decrease the amount of content for the initial render.
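To see why blocking time and paint timing matter so much, it helps to know that the Lighthouse performance score behind PageSpeed Insights is a weighted average of per-metric scores. The sketch below assumes the Lighthouse 8 weights, as we understand them (current when this post was written; later Lighthouse versions changed them), and takes each metric's score, from 0 to 1, as given, skipping the curves Lighthouse uses to convert raw timings into those scores. The names MetricScores and performanceScore are our own.

```typescript
// Per-metric scores in [0, 1]; Lighthouse derives these from raw timings.
interface MetricScores {
  fcp: number; // First Contentful Paint
  si: number;  // Speed Index
  lcp: number; // Largest Contentful Paint
  tti: number; // Time to Interactive
  tbt: number; // Total Blocking Time
  cls: number; // Cumulative Layout Shift
}

// Assumed Lighthouse 8 weights; they sum to 1.
const WEIGHTS: MetricScores = {
  fcp: 0.10,
  si: 0.10,
  lcp: 0.25,
  tti: 0.10,
  tbt: 0.30,
  cls: 0.15,
};

// Weighted average of the metric scores, scaled to 0..100 and rounded.
function performanceScore(scores: MetricScores): number {
  let total = 0;
  for (const key of Object.keys(WEIGHTS) as (keyof MetricScores)[]) {
    total += WEIGHTS[key] * scores[key];
  }
  return Math.round(total * 100);
}

// A page that is perfect on everything except blocking time:
console.log(performanceScore({ fcp: 1, si: 1, lcp: 1, tti: 1, tbt: 0, cls: 1 })); // 70
```

Under these weights, a page whose TBT score is zero cannot score above 70 no matter how fast it paints, which is why cutting JavaScript comes first.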

Why so much JavaScript?

Our homepage is essentially a static page. Why does it need JavaScript? Well, the homepage is a Next.js site, which means it is a React application (we use Mitosis to convert the output of our drag-and-drop editor into React). While the vast majority of the site is static, three things require JavaScript:

- Navigation system: Menus require interactivity and hence JavaScript. Also, different menus are used for desktop and mobile devices.

- We need to load a chat widget.

- We need Google Analytics.

Let’s dive into each one separately.

Application bootstrap

Even though this is primarily a static site, it is still an application. To make the menus work, the application needs to be bootstrapped. Specifically, it needs to run rehydration, in which the framework compares the templates against the DOM and installs all of the DOM listeners. This process is what makes existing frameworks replayable: even though 95% of the page is static, the framework must download all of the templates and re-execute them to determine where the listeners are. The implication is that the site is downloaded twice, once as HTML and then again in the form of JSX in JavaScript.

To make matters worse, the rehydration process is slow. The framework must visit each DOM node and reconcile it against the VDOM, which takes time. And the rehydration process can’t be delayed, as it is the same process that installs DOM listeners. Delaying rehydration would mean that the menus would not work.

What we are describing above is a fundamental limitation of every existing framework: they are all replayable. This also means that no existing framework will allow you to score 100 on mobile on a real-world site. The amount of HTML and JavaScript is simply too great to fit into the tiny sliver of time that PageSpeed allots for it on mobile.
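The cost of replaying can be illustrated with a toy model. The sketch below is not any real framework's reconciler; it simply walks a server-rendered DOM tree and a re-created virtual DOM tree in lockstep, attaching a listener wherever a template declares one. The types VNode and DomNode and the hydrate function are hypothetical simplifications.

```typescript
// Toy model of replay-style hydration (illustration only).
interface VNode {
  tag: string;
  props: Record<string, unknown>;
  children: VNode[];
}

interface DomNode {
  tagName: string;
  children: DomNode[];
  listeners: Map<string, (ev: unknown) => void>;
}

interface HydrationStats {
  visited: number;
  listenersAttached: number;
}

function hydrate(dom: DomNode, vnode: VNode, stats: HydrationStats): void {
  stats.visited++;
  if (dom.tagName !== vnode.tag || dom.children.length !== vnode.children.length) {
    throw new Error(`Hydration mismatch at <${dom.tagName}> vs <${vnode.tag}>`);
  }
  // Handlers such as onClick exist only in the JavaScript templates, so the
  // templates must be re-executed to learn where listeners belong.
  for (const [name, value] of Object.entries(vnode.props)) {
    if (name.startsWith("on") && typeof value === "function") {
      dom.listeners.set(name.slice(2).toLowerCase(), value as (ev: unknown) => void);
      stats.listenersAttached++;
    }
  }
  // Every child is visited, including purely static content.
  vnode.children.forEach((child, i) => hydrate(dom.children[i], child, stats));
}

// Helpers to build small trees.
const el = (tagName: string, children: DomNode[] = []): DomNode =>
  ({ tagName, children, listeners: new Map() });
const v = (tag: string, props: Record<string, unknown> = {}, children: VNode[] = []): VNode =>
  ({ tag, props, children });

const dom = el("body", [el("nav", [el("button")]), el("main", [el("p"), el("p")])]);
const app = v("body", {}, [
  v("nav", {}, [v("button", { onClick: () => {} })]),
  v("main", {}, [v("p"), v("p")]),
]);
const stats: HydrationStats = { visited: 0, listenersAttached: 0 };
hydrate(dom, app, stats);
console.log(stats); // { visited: 6, listenersAttached: 1 }
```

Only one of the six nodes needs a listener, yet hydration visits all six, and it can only start after the templates for the whole page have been downloaded and re-executed.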

We need to fundamentally rethink the problem. Since most of the site is static, we should not have to re-download that portion in JavaScript, or pay for rehydration of something we don’t need. This is where Qwik truly shines. Qwik is resumable, not replayable, and that makes all the difference. As a result, Qwik does not need to:

- Be bootstrapped on page load

- Walk the DOM to determine where the listeners are

- Eagerly download and execute JavaScript to make the menus work

All of the above means that there is practically no JavaScript to execute on site load, and yet we retain all of the interactivity of the site.
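Resumability can be sketched under some simplifying assumptions of our own. Rather than attaching listeners at load time, a single document-level listener waits for an event, walks up from its target to the nearest element carrying an on:<event> attribute (the attribute style used in the examples in this post), and returns that attribute's value: a reference to code that can be fetched only when it is needed. ElementLike, findHandler, and fake are hypothetical names, and Qwik's actual loader does more than this.

```typescript
// Minimal structural view of a DOM element, so the sketch runs without a browser.
// A real Element satisfies this interface.
interface ElementLike {
  getAttribute(name: string): string | null;
  parentElement: ElementLike | null;
}

// Walk from the event target toward the root and return the first
// on:<eventType> attribute value, e.g. "ui:boot_intercom". Nothing was
// attached to these elements at load time; the attribute in the
// server-rendered HTML is the only record of the listener.
function findHandler(target: ElementLike | null, eventType: string): string | null {
  for (let el = target; el !== null; el = el.parentElement) {
    const ref = el.getAttribute(`on:${eventType}`);
    if (ref !== null) return ref;
  }
  return null;
}

// Stand-in elements for the example.
function fake(attrs: Record<string, string>, parent: ElementLike | null): ElementLike {
  return { getAttribute: (name) => attrs[name] ?? null, parentElement: parent };
}

const launcher = fake({ "on:click": "ui:boot_intercom" }, null);
const icon = fake({}, launcher); // e.g. an icon inside the launcher
console.log(findHandler(icon, "click")); // ui:boot_intercom
```

In a browser, the lookup would run inside a single document.addEventListener("click", ...) callback with the event target passed in, so no per-element setup happens on page load.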

Intercom

Intercom is a third-party widget running on our site which allows us to interact with our customers. The standard way of installing it is to drop a piece of JavaScript into your HTML, like so:

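```html
<script>
  // Queueing stub (the pattern Intercom's official snippet uses): calls made
  // before the widget script arrives are stored in Intercom.q until it loads.
  (function () {
    var i = function () { i.c(arguments); };
    i.q = [];
    i.c = function (args) { i.q.push(args); };
    window.Intercom = i;
  })();
  Intercom('boot', { app_id: 'abcd1234' });
</script>
<script type="text/javascript" async src="https://widget.intercom.io/widget/abcd1234"></script>
```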

However, there are two issues with the above:

- It adds JavaScript that needs to be downloaded and executed. This will count against our TBT/TTI.

- It may cause layout shifts, which count against CLS. This is because the UI is first rendered without the widget and then again with it once the JavaScript has been downloaded and executed.
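For a sense of how a late-arriving widget can hurt CLS: the score of a single layout shift is its impact fraction (the share of the viewport covered by the union of a shifting element's before and after positions) multiplied by its distance fraction (the largest distance moved, divided by the viewport's largest dimension). The sketch below handles one shifted element only; browsers combine the impact regions of every element that shifts in the same frame, and CLS then aggregates shifts over the life of the page. Rect and layoutShiftScore are our own names, and the numbers in the example are illustrative.

```typescript
interface Rect { x: number; y: number; width: number; height: number }

function area(r: Rect): number {
  return r.width * r.height;
}

// Overlap of two rectangles (zero-sized if they do not overlap).
function intersection(a: Rect, b: Rect): Rect {
  const x1 = Math.max(a.x, b.x), y1 = Math.max(a.y, b.y);
  const x2 = Math.min(a.x + a.width, b.x + b.width);
  const y2 = Math.min(a.y + a.height, b.y + b.height);
  return { x: x1, y: y1, width: Math.max(0, x2 - x1), height: Math.max(0, y2 - y1) };
}

// Clip a rectangle to a viewport whose origin is at (0, 0).
function clip(r: Rect, vw: number, vh: number): Rect {
  return intersection(r, { x: 0, y: 0, width: vw, height: vh });
}

// Layout shift score for one element that moved from `before` to `after`.
function layoutShiftScore(before: Rect, after: Rect, vw: number, vh: number): number {
  const b = clip(before, vw, vh);
  const a = clip(after, vw, vh);
  // Union of the two positions = A + B - overlap.
  const unionArea = area(b) + area(a) - area(intersection(a, b));
  const impactFraction = unionArea / (vw * vh);
  const moved = Math.max(Math.abs(after.x - before.x), Math.abs(after.y - before.y));
  const distanceFraction = moved / Math.max(vw, vh);
  return impactFraction * distanceFraction;
}

// Content (full width, half the viewport tall) pushed down 25px by a widget
// that renders late, in a 100x100 viewport.
console.log(layoutShiftScore(
  { x: 0, y: 0, width: 100, height: 50 },
  { x: 0, y: 25, width: 100, height: 50 },
  100, 100,
)); // 0.1875
```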

Qwik solves both issues at the same time.

First, we capture the DOM that Intercom renders for the widget. Next, we insert that DOM directly into the page, like so:

```html
<div class="intercom-lightweight-app" aria-live="polite">
  <div
    class="intercom-lightweight-app-launcher intercom-launcher"
    role="button"
    tabindex="0"
    aria-label="Open Intercom Messenger"
    on:click="ui:boot_intercom"
  >
    ...
  </div>
  <style id="intercom-lightweight-app-style" type="text/css">...</style>
</div>
```

The benefit of this is that the widget renders instantly with the rest of the application. There is no delay or flicker while the browser downloads and executes the Intercom JavaScript to create the widget. The result is a better user experience and a faster bootstrap of the website. (It also saves bandwidth on mobile devices.)

However, we still need a way to detect a click on the widget and some code to replace the mock widget with the actual Intercom widget when the user interacts with it. This is achieved with the on:click="ui:boot_intercom" attribute. The attribute tells Qwik to download boot_intercom.js if the user clicks on the mock widget.

Contents of boot_intercom.js:

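```js
// Qwik calls the default export with the element that was clicked
// (here, the mock launcher div).
export default async function (element) {
  // Download and execute the real Intercom widget script.
  await import('https://widget.intercom.io/widget/abcd1234');

  // Remove the mock widget: the .intercom-lightweight-app container,
  // including its inline <style>.
  const container = element.parentElement;
  container.remove();

  // Boot the real widget and open it, since the user has already clicked.
  Intercom('boot', { app_id: 'abcd1234' });
  Intercom('show');
}
```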

The file above downloads the real Intercom widget, removes the mock, and bootstraps Intercom. All of this happens naturally, without the user ever noticing the switcheroo.
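The step that connects the on:click="ui:boot_intercom" attribute to boot_intercom.js can be sketched as a small loader: it turns the attribute value into a module URL, imports that module the first time it is needed, and calls its default export with the clicked element. The mapping from ui:boot_intercom to ./boot_intercom.js, and the names resolveHandlerUrl and createLoader, are hypothetical, not Qwik's actual resolution rules or API. The importer is passed in so the sketch runs outside a browser; in a page you would pass (url) => import(url).

```typescript
// A lazily loaded handler module: its default export receives the element.
type HandlerModule<E> = { default: (element: E) => unknown };
type Importer<E> = (url: string) => Promise<HandlerModule<E>>;

// Hypothetical mapping: drop the "ui" namespace and any leading slash,
// so "ui:boot_intercom" -> "./boot_intercom.js" and "ui:/lazy" -> "./lazy.js".
function resolveHandlerUrl(ref: string): string {
  const sep = ref.indexOf(":");
  if (sep < 0) throw new Error(`Malformed handler reference: ${ref}`);
  const name = ref.slice(sep + 1).replace(/^\//, "");
  return `./${name}.js`;
}

function createLoader<E>(importModule: Importer<E>) {
  // Cache the import promise so each module is fetched at most once,
  // even if the user clicks again before the first download finishes.
  const cache = new Map<string, Promise<HandlerModule<E>>>();
  return async (ref: string, element: E): Promise<void> => {
    const url = resolveHandlerUrl(ref);
    let mod = cache.get(url);
    if (!mod) {
      mod = importModule(url);
      cache.set(url, mod);
    }
    await (await mod).default(element);
  };
}

// Example with a fake importer that records which URLs were requested.
const fetched: string[] = [];
const load = createLoader<string>(async (url) => {
  fetched.push(url); // stands in for a network request
  return { default: (el: string) => console.log(`handler ran for ${el}`) };
});
void load("ui:boot_intercom", "mock launcher");
void load("ui:boot_intercom", "mock launcher"); // second click: cache hit
console.log(fetched); // [ './boot_intercom.js' ]
```

Caching the promise rather than the resolved module is what keeps two quick clicks down to a single download.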

Google Analytics

So far, we have fought a good fight in delaying JavaScript and hence improving the website’s performance. Analytics is different, as we can’t delay it and must bootstrap it immediately. Bootstrapping analytics alone would prevent us from scoring 100 on PageSpeed Insights for mobile. To fix this, we will be running Google Analytics in a Web Worker using Partytown. More about this in a later post.

JavaScript delayed

The work described above lowers the amount of JavaScript the website has to download and execute to about 1KB, which takes a mere 1ms to execute. Essentially, no time. Such a minimal amount of JavaScript is what earns us a perfect score on TBT/TTI.
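Total Blocking Time adds up, for each main-thread task between First Contentful Paint and Time to Interactive, the portion of the task that runs beyond 50 ms. The sketch below assumes the task durations in that window are already known; the durations in the example are made up for illustration, with the second call standing in for roughly 1ms of script. The function name totalBlockingTime is our own.

```typescript
// Tasks longer than this block user input; only the excess counts.
const BLOCKING_THRESHOLD_MS = 50;

// Sum each task's time beyond the threshold; short tasks contribute nothing.
function totalBlockingTime(taskDurationsMs: number[]): number {
  return taskDurationsMs.reduce(
    (sum, duration) => sum + Math.max(0, duration - BLOCKING_THRESHOLD_MS),
    0,
  );
}

console.log(totalBlockingTime([30, 70, 250])); // 220 (a hydration-heavy load)
console.log(totalBlockingTime([1]));           // 0
```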

HTML delayed

However, even with essentially no JavaScript, we still can’t score 100 on mobile unless we reduce the amount of HTML sent to the client for above-the-fold rendering. To improve FCP/LCP, we must shrink that HTML to a minimum, which we do by sending only the above-the-fold HTML.

This is not a new idea, but it is tough to execute. The existing frameworks make this difficult, as there is no easy way to break up your application into pieces that are above and below the fold. VDOM does not help here, because the application generates a whole VDOM even if only a portion of it is projected. If part of the DOM were missing, the framework would re-create it during rehydration, resulting in even more work on the initial bootstrap.

Ideally, we’d like to not ship the HTML that is below the fold, while maintaining a fully interactive menu system above the fold. In practice, this is hard to do, as the absence of such a practice in the wild suggests. It’s too hard to do, so no one does it.

Qwik is DOM-centric, which makes all the difference. The entire page is rendered on the server. Then, the portion of the page that does not need to be shipped is located and removed. As the user scrolls, the missing portion is lazily downloaded and inserted. Qwik doesn’t mind these kinds of DOM manipulations, because it is stateless and DOM-centric.

Here is the actual code on our server that enables lazy loading of the site below the fold:

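```typescript
async render(): Promise<void> {
  // Render the entire page on the server first.
  await (this.vmSandbox.require('./server-index') as ServerIndexModule).serverIndex(this);

  // Locate the below-the-fold section marked for lazy loading.
  const lazyNode = this.document.querySelector('section[lazyload=true]') as HTMLElement | null;
  if (lazyNode) {
    // Strip its HTML from the initial response, but keep a tall placeholder
    // so the page still has room to scroll into.
    const lazyHTML = lazyNode.innerHTML;
    lazyNode.innerHTML = '';
    lazyNode.style.height = '999em';

    // Ask Qwik to download and run lazy.js on the first document scroll.
    lazyNode.setAttribute('on:document:scroll', 'ui:/lazy');

    // Generate lazy.js: it removes the scroll trigger, drops the placeholder
    // height, and restores the stripped HTML.
    this.transpiledEsmFiles['lazy.js'] = `
export default (element) => {
  element.removeAttribute('on:document:scroll');
  element.style.height = '';
  element.innerHTML = ${JSON.stringify(lazyHTML)};
};`;
  }
}
```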

The code is simple and to the point, yet it would be difficult to achieve with any of the existing frameworks.

Check out the below-the-fold lazy loading in action:

Notice that the page first loads without the content below the fold; as soon as the user scrolls, the content is populated. This population is near-instant since there is no complex code to execute, just a fast and straightforward innerHTML assignment.

Try it out

Experience the page for yourself here: https://www.builder.io/?render=qwik (and check its score on PageSpeed Insights). We are still missing analytics, but that is coming soon.

Like what you see? Our plan is to make Qwik available for every Builder.io customer, so that their sites are supercharged for speed out of the box. You have never seen a platform that is this fast before.

Do you find the above exciting? Then join our team and help us make the web fast!

- Try it on StackBlitz

- Star us on github.com/builderio/qwik

- Follow us on @QwikDev and @builderio

- Chat with us on Discord

- Join builder.io